Making Llama More Accessible

Open-sourcing and releasing fullmoon

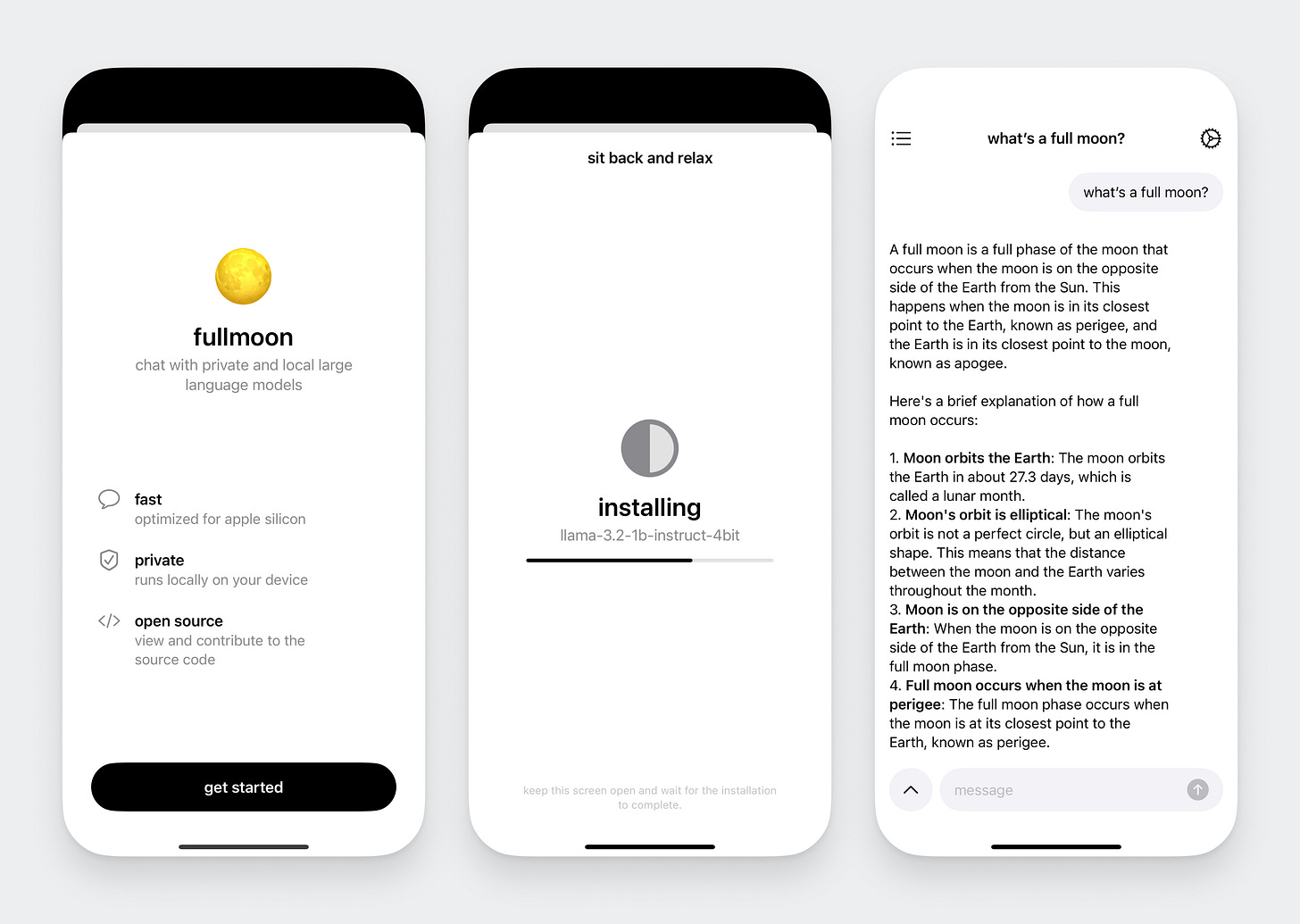

Today, Mainframe is open-sourcing and releasing an early beta preview of fullmoon, an experimental app to chat with private and local large language models.

With the release of Llama 3.2’s lightweight 1B and 3B models, it is now possible to run a highly capable model locally and privately, with data never leaving the device. With fullmoon, we want to bring the best experience for chatting with Llama to everyone and make it more accessible.

Running these models locally comes with two major advantages. First, prompts and responses can feel instantaneous, since processing is done locally. Second, running models locally maintains privacy by not sending data such as messages and calendar information to the cloud, making the overall application more private.

— Llama 3.2: Revolutionizing edge AI and vision with open, customizable models

fullmoon

fullmoon is an experimental iOS app to chat with Llama that’s optimized for Apple silicon and works on iPhone, iPad, and Mac. As of today, you can install and chat with Llama 3.2 1B and 3B models. Your chat history is saved locally, and you can customize the appearance of the app.

It’s available now on TestFlight as an early beta preview. Your feedback is welcomed and appreciated.

Open source

We’ve open-sourced the fullmoon iOS app, which is built with SwiftUI, on GitHub. fullmoon is made possible by MLX Swift from Apple. MLX is an array framework for machine learning research on Apple silicon, brought to you by Apple machine learning research.

We’d love your help, support, and contributions as we continue to evolve fullmoon and make all current and future large language models that are optimized for on-device use even more accessible.

You can find us at @mainframe and mainfra.me. If you’d like to join us, email careers@mainfra.me.

The name fullmoon is a nod to “LLM”, or Large Language Model. (fuLLMoon)