Agent Clouds

A Conceptual Framework for Agent-Based Autonomous Software

Originally published October 25, 2023

In 2018, I imagined a world where the line between human intelligence and machine intelligence blurred entirely, and today that world is quickly taking shape. As 2023 draws to a close, the rapidly emerging arena of AI-based autonomous agents will clearly be one of its highlights. But these are only the very early stages of the concept. The ideas here extend the current landscape of isolated agents to a point where they coalesce into AI Agent Clouds.

This piece explores a future in which AI evolves into autonomous, intelligent agents that interact and collaborate with each other to form complex networks. In that future, people will be at the center of a functional intelligence-interface layer: a cloud of autonomous AI agents. This is the concept of Agent Clouds.

These AI agents orbit the human nucleus, assuming menial tasks. They interact before we meet, take notes and even check back in after we part, and handle exchanges in between. The cloud is an amplifier of human capacity, making every individual a nucleus of their own unique intelligent cosmos.

Imagine each of us at the center of our own sphere of intelligent agents, each one helping us make the best decisions for ourselves. These AI agents don’t merely work for us; they collaborate, negotiate, and facilitate on our behalf, becoming extensions of ourselves. They’re busy planning and negotiating terms before we even walk into a meeting, and they continue to operate, analyze, and adapt long after we’ve moved on to new ventures.

Building on the vision I started imagining five years ago in a project called Richly Imagined Future, which developed into my contributions to the strategic foresight book Dynamic Future-Proofing by Alexander Manu, this framework further develops the nature of the collaboration and synergy between AI agents, data layers, and humans, ultimately depicting more nuanced interactions.

Instead of viewing interactions in the binary terms of machine-to-machine or machine-to-human, this framework focuses on the dynamic exchange of information and tasks within a network of agents, their associated data layers, and the people they support.

This is partly inspired by recent developments in autonomous software systems like AutoGPT, BabyAGI, and Dot (yes, even PizzaGPT), in local LLM services like Ollama and LM Studio, in tools developed by LangChain, and in research by PlasticLabs on Violation of Expectation (VoE).

2023 and the State of AI Agents

Autonomous agents can be thought of simply as applications that have the ability to think, respond, write and execute code, and autonomously execute tasks.

Given a goal articulated in natural language, such an agent does its best to achieve it by breaking it into sub-tasks and working through them in a recursive loop, searching the internet and using other tools along the way.
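The decompose-and-execute loop can be sketched with a simple task queue. This is a minimal illustration, not how AutoGPT or BabyAGI are actually implemented: `plan` and `execute` are hypothetical callables standing in for the LLM calls a real agent would make, and `max_steps` is an assumed safeguard against endless decomposition.

```python
from collections import deque
from typing import Callable

# Hypothetical stand-ins: in a real agent, both would be LLM calls.
Planner = Callable[[str], list[str]]   # task -> sub-tasks ([] means "do it directly")
Executor = Callable[[str], str]        # task -> result


def run_agent(goal: str, plan: Planner, execute: Executor, max_steps: int = 20) -> list[str]:
    """Break a goal into sub-tasks and work through them with a task queue."""
    queue = deque([goal])
    results: list[str] = []
    steps = 0
    while queue and steps < max_steps:
        task = queue.popleft()
        steps += 1
        subtasks = plan(task)
        if subtasks:
            # Put sub-tasks at the front, in order, so they run before the rest of the queue.
            queue.extendleft(reversed(subtasks))
        else:
            results.append(execute(task))
    return results


plan = lambda t: {"plan a trip": ["check weather", "book flight"]}.get(t, [])
print(run_agent("plan a trip", plan, lambda t: f"done: {t}"))
```

The step cap matters because a planner that keeps decomposing would otherwise loop forever; real agent frameworks add similar limits on iterations or cost.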

Centralized services like OpenAI are not the only choice for these tech stacks though. Open source language models like Llama 2 and Mistral can operate directly on local hardware through tools like Ollama and LM Studio, while LlamaIndex can pull relevant context from large datasets for effective context window management, all on-device, without relying on third-party private AI services.
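As an illustration of fully local inference, the sketch below calls Ollama’s HTTP API using only the Python standard library. It assumes an Ollama server running on its default port (11434) with a model already pulled, for example via `ollama pull llama2`; the helper names `build_request` and `generate_locally` are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(prompt: str, model: str = "llama2") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally running Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )


def generate_locally(prompt: str, model: str = "llama2") -> str:
    """Send the prompt to the local server; the request never leaves this machine."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

Swapping `model` to another locally pulled model such as `mistral` changes nothing else, which is part of the appeal of local stacks.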

Of course, a tech company may build a solution or technology layer that outperforms any open-source system of cooperating agents, but the open-source AI space is developing rapidly and is very competitive. Because local operation lets users run open-source models on their own devices, local inference may better support data ownership and individual sovereignty. In either case, 2023 seems to be the year these agents began to walk.

2018 and the Richly Imagined Future

This echoes the vision I outlined in my Richly Imagined Future project in 2018, where the economy undergoes a transformation by the year 2038:

“Artificial intelligence, machine learning, and digital currencies enabled the machine economy to fully take over the role of labor in society”

In hindsight, these recent AI agents align with that idea that “machines were able to transact, and entirely new layers of the economy formed around machine-to-machine connectivity and exchange.”

Five years ago I imagined a future where our “appliances, phone, home, car… are run by self-executing smart contracts enabling them to operate a series of decentralized micro-businesses”, which raised the question:

“What happens when our machinery, our tools, and our products become financially and functionally autonomous?”

The following framework is an exploration into that same question, a redefinition of the interaction between people and autonomous applications. It is meant to inspire and provide structure to a complex and emerging field.

It ultimately revolves around the question: “What futures emerge in a scenario where artificial intelligence enables a fully autonomous and decentralized machine economy?” It explores futures where labor shifts its focus from completing manual tasks toward a deeper exploration of purpose, meaning, and creation.

Our Evolving Relationship with Machines

Before diving into the impact this transformation will have, it helps to look to history for some context (not for pattern prediction though lol).

Industrial machinery made much of the physical labor of its era obsolete, deeply affecting industries like farming and manufacturing and, as a result, the economy and our quality of life.

Domestic machinery made obsolete many time-consuming and demanding activities that were previously done by hand, altering the patterns of the home and society in positive ways.

Deterministic machinery (the “traditional computer”) made much of the arithmetic labor once done by hand obsolete, replacing paper ledgers and manual calculation with spreadsheets, CSV files, word processors, and calculators.

Intelligent machinery will make menial intellectual tasks obsolete, though the boundaries and definition of those tasks have yet to fully emerge.

At every shift, our definition of machinery has advanced, and human capability has increased.

Routine Cognitive Tasks

Routine Cognitive Tasks are intellectual activities that, while requiring mental effort, mostly consist of repetitive, procedural, or routine functions. Unlike higher-order cognitive functions, where human ingenuity, creativity, intent, and long-term strategic thinking are critical, and unlike purely arithmetic tasks that traditional software already handles, routine cognitive tasks are characterized by their predictability, their repetitiveness, and their contribution to cognitive fatigue.

Consider these routine cognitive tasks:

Information Digestion

The sheer volume of information available today is becoming totally overwhelming. An LLM that can rapidly digest this data into concise, personalized summaries can help you stay well-informed without the cognitive fatigue.

Budgeting

Managing personal finances is crucial, yet taxing. An LLM can relieve much of the cognitive load by handling the nuances of income, expenses, savings, and investments in ways that today’s familiar software cannot. By analyzing data, running exploratory data analysis, surfacing insights, making suggestions, filling out forms, and checking over work, LLMs can take on much of the complex but rudimentary work involved in staying on top of your finances.

Travel Planning

The dynamic nature of the variables involved in route planning, like traffic and weather changes, can make this task daunting. LLM-based agents can draw on a wide array of live data points to recommend efficient, low-stress travel plans and adjust them as conditions change.

In this chat example, I worked with ChatGPT-4 with Code Interpreter (itself a component or primitive of OpenAI’s coming GPTs and Assistants) to emulate this process. I sent a request to plan an itinerary for me, my preference to avoid rain, and three files: a list of flights at Paris CDG airport, a document with the weather in Paris, and a list of indoor and outdoor places I want to visit.

It then used Code Interpreter to import and examine the contents of the files…

It analyzed my request against the data in the files to find the driest 3-day period in my window…

And it put together an itinerary for the 3 driest days I could go to Paris, hitting all the landmarks. It didn’t pick up on the request for tickets I was hoping for, but I wasn’t very clear. With another prompt, it could dig into those and help select tickets. This is just a simple example of the kinds of things that will soon be handled entirely by autonomous software.
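The “find the driest 3-day period” step is essentially a sliding-window minimum. The sketch below assumes the weather document has already been reduced to one precipitation total (in mm) per day; it is an illustration of the technique, not the code ChatGPT actually ran, and `driest_window` is a hypothetical name.

```python
def driest_window(daily_rain_mm: list[float], days: int = 3) -> int:
    """Return the start index of the `days`-long window with the least total rain."""
    if days <= 0 or days > len(daily_rain_mm):
        raise ValueError("window must fit inside the forecast")
    window = sum(daily_rain_mm[:days])
    best_start, best_total = 0, window
    for start in range(1, len(daily_rain_mm) - days + 1):
        # Slide the window one day: add the new last day, drop the old first day.
        window += daily_rain_mm[start + days - 1] - daily_rain_mm[start - 1]
        if window < best_total:  # strict "<" keeps the earliest window on ties
            best_start, best_total = start, window
    return best_start


rain = [5.0, 0.0, 1.0, 0.0, 0.0, 4.0, 2.0]  # mm per day across the travel window
start = driest_window(rain)
print(start)  # index of the first day; map it back to dates from the weather file
```

Each slide costs constant time, so even a long forecast is scanned in a single pass.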

Comparison Shopping

Product listings and prices change all the time, especially in ecommerce. LLMs can scan multiple platforms in real time to find the best deals, do product research, or check that choices meet your needs, with far less manual research.

Scheduling Meetings

In professional settings, scheduling can become a game of calendar Tetris, especially when coordinating among multiple stakeholders. LLMs can streamline this process by analyzing everyone’s availability and preferences and propose a few times to all parties, alleviating a lot of the usual back-and-forth.
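The core of that scheduling step is finding gaps common to everyone’s calendar. In this minimal sketch, assume each participant’s agent shares only busy intervals (no event details), expressed as minutes since midnight on a single day; `propose_slots` and its parameters are hypothetical.

```python
def propose_slots(busy: list[list[tuple[int, int]]], day_start: int, day_end: int,
                  duration: int, limit: int = 3) -> list[tuple[int, int]]:
    """Propose up to `limit` meeting slots (minutes since midnight) free for everyone."""
    # Pool and sort everyone's busy intervals; a slot must avoid all of them.
    intervals = sorted(iv for person in busy for iv in person)
    slots: list[tuple[int, int]] = []
    cursor = day_start
    # A sentinel interval at day_end closes off the final gap of the day.
    for start, end in intervals + [(day_end, day_end)]:
        # Fill the gap before the next busy interval with back-to-back slots.
        while cursor + duration <= min(start, day_end) and len(slots) < limit:
            slots.append((cursor, cursor + duration))
            cursor += duration
        cursor = max(cursor, end)
    return slots


alice = [(540, 600)]              # busy 9:00-10:00
bob = [(570, 630), (660, 720)]    # busy 9:30-10:30 and 11:00-12:00
print(propose_slots([alice, bob], day_start=540, day_end=1020, duration=60))
```

Sharing only busy intervals is one way the agents could coordinate without exposing what each person is actually doing.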

Many routine cognitive tasks are being automated, not to replace our jobs but to enhance us as people. By offloading these routine tasks onto AI, we can focus on our strengths: empathy-driven decision-making, creative drive, ethical considerations, intent, will, and long-term strategic decision-making. The automation of routine cognitive tasks represents not an obsolescence of human capability but an extension of it, increasing our own agency and autonomy, both mentally and temporally.

The Past and the Future

As we look back at the impact that machines have had on human life, from the basic hand tools of early civilizations to the complex AI-driven solutions of today, we begin to recognize a pattern. Every era, characterized by the tools and technologies it employed, paved the way for the next, with each phase an enhancement rather than a replacement.

AI’s role is shifting from prompt-based automation to intelligent autonomous agents that can research, analyze, predict, carry out tasks, and advise. As I mentioned in the Richly Imagined Future project, autonomous organizations are no longer a distant dream but a tangible reality.

Atomic AI Agent Framework

This model is intended to be both a conceptual and practical tool, aiding in system design, anticipating agent behavior and associated social and ethical dynamics, as well as offering a vision for future applications of AI.

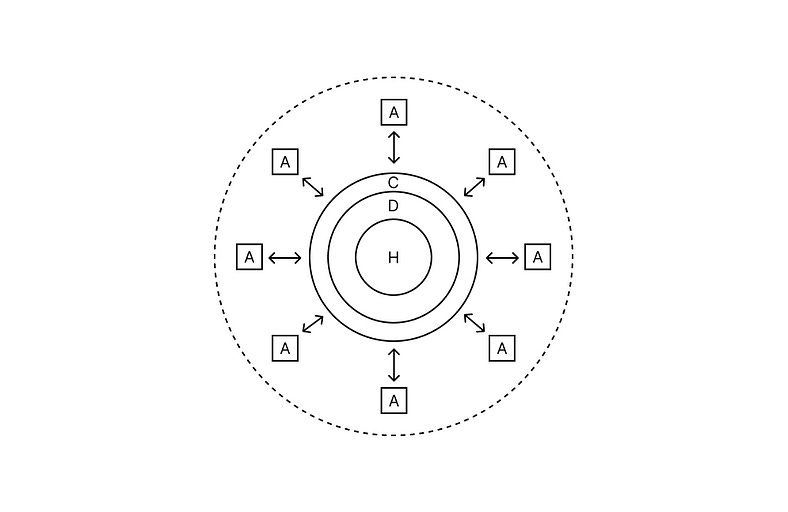

The structures of our emerging relationship with AI can be imagined as an atomic structure. At the core of the atom is the human, analogous to the nucleus. Surrounding this nucleus, AI agents come to life like electrons, dynamically appearing and vanishing based on needs and tasks. This singular interplay of human, agent, data, and memory then represents the Atom, whereas the ring of agents itself is the agent cloud, analogous to the electron cloud.

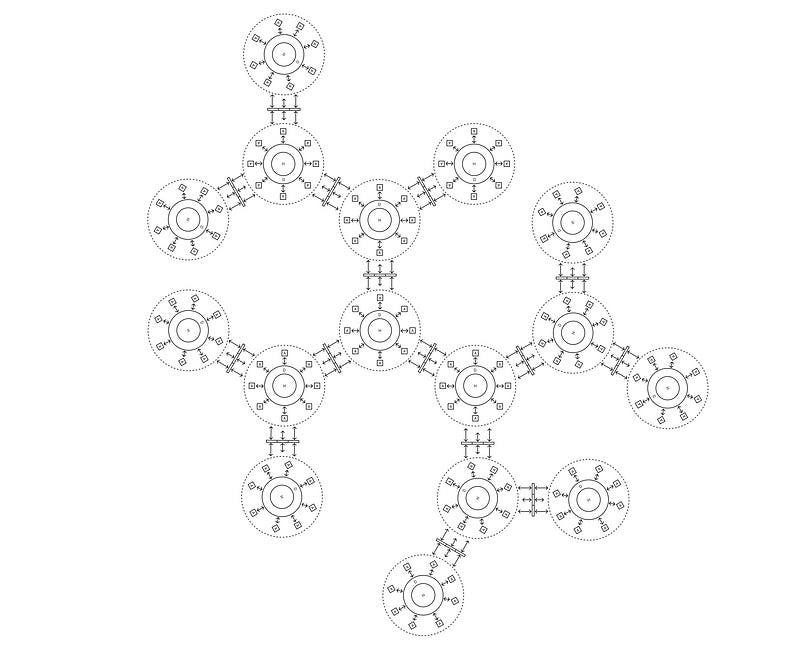

When two such Atoms come into contact, a Dyad emerges, characterized by the shared operations and interactions of their respective clouds of agents. As these relationships become more complex, we see the birth of ‘Molecules’ and eventually ‘Organisms’, representing intricate networks of interdependent Atoms.

The atomic AI agent cloud framework visualizes how humans and AI agents may interact in the future. By understanding the base ‘Atom’, we can anticipate behavior in larger groupings like ‘Dyads’ and ‘Molecules’.

The Anatomy of the AI Agent Cloud

The Human Core

At the center of the Atom is the individual person, the primary element. We constantly interact with the external world, absorbing information from various sources like social media, books, conversations, and experiences, and writing data with our actions, decisions, and creations. We don’t need to capture more data than we do today, but we must aim to control the permeability of this layer between human reality and our collected and stored data.

The Data Layer

Surrounding the human core is the data layer, a dynamic layer that accumulates over time as users provide data or link accounts together. This layer is metaphorical: it is not a data lake but a conceptual region, one that supports separate threads or compartmentalized data per agent and per use case.

The Context Layer

Around the data layer is the Context Layer, a conceptual layer that shifts in real-time, organizing and presenting data that is immediately relevant to each agent’s specific task. It’s a dynamic vector store where context is continuously retrieved, structured, and managed. This ensures that the agents engage with information that is not only up-to-date but also specifically curated for the task at hand, improving the effectiveness and relevance of the agent’s functions.
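To make the retrieval idea concrete, here is a minimal sketch of a per-agent context store ranked by cosine similarity. The bag-of-words `embed` function is a toy stand-in (an assumption) for a real embedding model, and `ContextLayer` is a hypothetical name; a production system would use learned embeddings and a vector database.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ContextLayer:
    """Per-agent store: only this agent's documents are searched."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


travel = ContextLayer()
for note in ["flight to paris on friday", "grocery list eggs milk", "hotel in paris near louvre"]:
    travel.add(note)
print(travel.retrieve("paris hotel", k=1))
```

Giving each agent its own store mirrors the compartmentalized, per-use-case data described above: a travel agent never searches the grocery notes of a shopping agent unless they are explicitly shared.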

The Agent Cloud

Beyond the context layer lies the Agent Cloud, a sophisticated network of AI-powered autonomous agents, each specialized in specific tasks. These agents continuously interact with the data layer, pulling context and chaining prompts to perform specialized functions.

Together, these agents form the agent cloud, a membrane between the human core and the vast amounts of information we deal with daily. They handle a wide range of tasks, making life less demanding and more aligned with our choices and needs.

Core Concepts

Dynamic Data Stream

The surrounding data is a stream that flows, changes, and adapts. It incorporates continuous inputs from the user’s experiences, agent memories, recent interactions, and other contextually relevant data. This stream also ensures synchronization between the human core and the Agent Cloud, providing real-time updates that reflect any new developments in one’s life.

Adaptive Prioritization

The Agent Cloud recognizes and responds to the relevance and urgency of tasks as they arise. Whether it’s an unplanned business trip or an unexpected family event, these agents quickly adjust their priorities.

Cumulative Learning with Memory

Agents compound their knowledge over time, refining strategies based on previous experiences. A pivotal component of this learning is the agent’s memory, which allows it to avoid repeating mistakes and to build on successful strategies. By incorporating principles like those from PlasticLabs’ work on Violation of Expectation (VoE), agents can better align their actions with past experiences and user preferences.

Inter-Agent Collaboration

At the heart of the Atom is the seamless collaboration between agents. These agents aren’t isolated entities; they function as part of a collective. They share information, strategies, and insights, ensuring that tasks are handled efficiently. For instance, if one agent identifies a user’s interest in cooking Italian cuisine, another agent responsible for shopping can procure ingredients for an Italian dish. It’s a series of collaborations where agents complement each other’s functions.
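One simple way to wire up this kind of collaboration is a publish/subscribe bus inside the cloud. The sketch below assumes hypothetical names throughout (`AgentBus`, the `interest.cooking` topic, `RECIPES`, `shopping_agent`); it shows the Italian-cuisine example, where the shopping agent only drafts a list rather than placing an order.

```python
from collections import defaultdict
from typing import Callable


class AgentBus:
    """In-cloud message bus: agents publish observations, others subscribe by topic."""

    def __init__(self) -> None:
        self.subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self.subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        for handler in self.subscribers[topic]:
            handler(payload)


# Hypothetical shopping agent: turns a detected cuisine interest into a draft list.
RECIPES = {"italian": ["pasta", "tomatoes", "basil", "parmesan"]}
shopping_list: list[str] = []


def shopping_agent(event: dict) -> None:
    for item in RECIPES.get(event["cuisine"], []):
        if item not in event.get("pantry", []):
            shopping_list.append(item)


bus = AgentBus()
bus.subscribe("interest.cooking", shopping_agent)
# An interest-tracking agent publishes what it noticed without knowing who listens.
bus.publish("interest.cooking", {"cuisine": "italian", "pantry": ["pasta", "basil"]})
print(shopping_list)
```

Decoupling publishers from subscribers lets new agents join the cloud and react to existing signals without changing the agents that produce them.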

Future 1: Atomic Agent Cloud

Avery’s day began with a concise audio summary of the news, key events, meetings, and tasks for the day, with context drawn from her news and social feeds, her notifications, and her day’s schedule.

On her way to work, Avery received a message from old friends, inviting her for a spontaneous trip over the weekend. Before she could even start wondering about the logistics, her travel planning agent had already analyzed the options. Considering her preferences, recent conversations, and past travel experiences, the agent presented Avery with a suggested itinerary and even incorporated potential impacts on her finances and upcoming plans.

While at work, Avery faced a deluge of emails from various teams, but her communication agent quickly sifted through them all, summarizing the main points into a concise brief so she didn’t miss any key updates. Later, as she prepped for her team’s weekly meeting, her meeting agent had already organized the context of the last meetings, setting a tentative agenda and even pulling up relevant pre-read materials.

After work, while considering an evening workshop, her schedule agent displayed the potential clashes and solutions. It even highlighted a few interested colleagues, a nudge from her networking agent.

In the evening, as Avery thought about dinner, her recipe manager agent suggested a few dishes from her favorite recipe book. Cross-referencing with her food inventory manager, it ensured she had most of the ingredients at hand. However, for a particular Italian dish she was leaning towards, her shopping manager highlighted a few ingredients she was short of and offered to place an order or schedule a shopping reminder.

This collaboration between her agents ensured that tasks were simplified, leaving her with just a few streamlined decisions to make. These decisions then activated a series of automated actions, alleviating the mental clutter often associated with daily chores.

The Dyad

A Dyad is a structured, secure, and dynamic relationship between two atoms, designed to facilitate a multitude of tasks and objectives for the individuals they represent.

Anatomy of the Dyad

Unified Interaction Space

This will serve as the primary communication channel for the two agent clouds. All real-time communications, from initial negotiation to ongoing interaction, can happen here. This unified chat approach minimizes overhead and keeps the interaction straightforward.

Shared Context Database (RAG-enabled Data Repository)

This temporary, sandboxed vector database allows agents to pull contextually relevant data directly into their decisions and interactions. Data required for the conversation is indexed for quick retrieval, and any more extensive datasets or resources that are needed can be pulled in and temporarily housed here. The agents then access this data during their interactions via retrieval-augmented generation (RAG).
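The “temporary, sandboxed” property can be sketched as a store whose lifetime is bound to the Dyad session. This minimal illustration omits vector search to focus on lifecycle; `SharedContext` and `dyad_session` are hypothetical names, and namespacing keys by owner is an assumed design choice.

```python
from contextlib import contextmanager
from typing import Iterator


class SharedContext:
    """Temporary store both agent clouds can read during one Dyad session."""

    def __init__(self) -> None:
        self.entries: dict[str, str] = {}

    def share(self, owner: str, key: str, value: str) -> None:
        # Keys are namespaced by owner so each side's contributions stay attributable.
        self.entries[f"{owner}:{key}"] = value

    def lookup(self, key_fragment: str) -> dict[str, str]:
        return {k: v for k, v in self.entries.items() if key_fragment in k}


@contextmanager
def dyad_session() -> Iterator[SharedContext]:
    ctx = SharedContext()
    try:
        yield ctx
    finally:
        # Sandbox semantics: nothing shared during the session outlives it.
        ctx.entries.clear()


with dyad_session() as ctx:
    ctx.share("alice", "agenda", "Q3 roadmap")
    print(ctx.lookup("agenda"))
print(ctx.entries)  # emptied once the session ends
```

Using a context manager means the cleanup runs even if the interaction ends with an error.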

Interaction Protocol

This is a set of rules and guidelines that dictate how the agents from both clouds can interact, make decisions, and negotiate. These mechanisms enforce consent and privacy and prioritize human agency: data sharing is governed by strict permissions, and any meaningful action requires explicit human approval.

A clear definition of what constitutes a ‘meaningful action’ then becomes necessary. While I can’t offer one, I can say that clicking a button on a website to read the contents of a page wouldn’t be a ‘meaningful action’, but ordering something on your behalf (anything that uses spend access) certainly would be.
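A permission gate of this kind might look like the sketch below. Since the text deliberately leaves “meaningful action” undefined, the `MEANINGFUL` set is a hypothetical placeholder, as are `Action`, `perform`, and the `ask_human` approval callback.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical classification; the exact definition of "meaningful" is left open.
MEANINGFUL = {"purchase", "share_personal_data", "sign_agreement"}


@dataclass
class Action:
    kind: str
    detail: str


def perform(action: Action, granted: set[str], ask_human: Callable[[Action], bool]) -> str:
    """Run an agent action only if it is permitted and, when meaningful, human-approved."""
    if action.kind not in granted:
        return "denied: no permission"
    if action.kind in MEANINGFUL and not ask_human(action):
        return "denied: human declined"
    return f"done: {action.kind}"


granted = {"read_page", "purchase"}
print(perform(Action("read_page", "open the menu"), granted, ask_human=lambda a: False))
print(perform(Action("purchase", "order flowers"), granted, ask_human=lambda a: False))
```

Checking permissions before asking for approval means people are only interrupted for actions their agents are allowed to attempt in the first place.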

Event Log

A chronological and auditable record of interactions, decisions, and changes made during the life of the Dyad.
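One way to make such a log auditable is hash chaining, where each entry commits to the one before it. This is an assumed technique chosen for illustration, not something the framework prescribes; `EventLog` and its fields are hypothetical.

```python
import hashlib
import json
import time


class EventLog:
    """Append-only log; each entry stores the hash of the previous one."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(record: dict) -> str:
        return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()

    def append(self, actor: str, event: str) -> None:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        record = {"ts": time.time(), "actor": actor, "event": event, "prev": prev}
        record["hash"] = self._digest(record)
        self.entries.append(record)

    def verify(self) -> bool:
        """Recompute every hash; editing an entry or removing one mid-chain breaks it."""
        prev = "0" * 64
        for rec in self.entries:
            body = {k: v for k, v in rec.items() if k != "hash"}
            if rec["prev"] != prev or rec["hash"] != self._digest(body):
                return False
            prev = rec["hash"]
        return True


log = EventLog()
log.append("alice-cloud", "proposed agenda")
log.append("bob-cloud", "accepted agenda")
print(log.verify())
```

Note that a chain alone does not reveal truncation of the newest entries; a real audit trail would also anchor the latest hash somewhere both parties can see.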

Core Concepts

Feedback Loop

A mechanism for each Agent Cloud to evaluate the success of the interaction and learn from it for future Dyads. Violation of Expectation (VoE), mentioned earlier, is one example of a framework for autonomous self-improvement based on interaction feedback.

Memory Weightage

Assigns importance scores to memories based on factors such as emotional significance, frequency of interaction, or potential future benefits. Memories with higher weightage are prioritized and retained, ensuring that the Agent Cloud’s storage remains efficient and relevant.
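A weightage score can be as simple as a weighted blend of the factors named above. In this sketch the factor scales, the `WEIGHTS` values, and the names `Memory` and `weightage` are all assumptions; a real system would tune or learn them.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    emotional: float       # 0..1, e.g. user-flagged or inferred significance
    interactions: int      # how many times this topic or person has come up
    future_benefit: float  # 0..1, estimated usefulness later


# Hypothetical weights; they sum to 1 so the score stays in [0, 1].
WEIGHTS = {"emotional": 0.4, "frequency": 0.3, "future": 0.3}


def weightage(m: Memory, max_interactions: int) -> float:
    """Importance score in [0, 1]: a weighted blend of the three factors."""
    frequency = m.interactions / max_interactions if max_interactions else 0.0
    return (WEIGHTS["emotional"] * m.emotional
            + WEIGHTS["frequency"] * min(frequency, 1.0)
            + WEIGHTS["future"] * m.future_benefit)


memories = [Memory("parking receipt", 0.0, 1, 0.1), Memory("sister's wedding", 1.0, 5, 0.5)]
ranked = sorted(memories, key=lambda m: weightage(m, max_interactions=10), reverse=True)
print([m.text for m in ranked])
```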

Future Interaction Potential

Evaluates the likelihood of repeated interactions with an entity. For instance, if the Agent Cloud predicts that you’ll interact with a specific colleague or friend more often, the information from past interactions with that individual might be stored for a more extended period, whereas if it’s a standard, anonymous, and ephemeral interaction then it might assign a lower significance to the future interaction potential.

This keeps agent memory from bloating with every single interaction you’ve had, while helping it pay more attention to people you’ve just met but are likely to meet again, like a new colleague at a first meeting.
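Future interaction potential could feed directly into how long details are kept. This minimal sketch assumes some upstream predictor supplies `p_repeat`, the estimated probability of meeting the person again, and maps it linearly onto a retention horizon; `retention_days`, `base_days`, and `max_days` are hypothetical.

```python
def retention_days(p_repeat: float, base_days: int = 7, max_days: int = 365) -> int:
    """Scale how long details from an interaction are kept by the chance of meeting again."""
    if not 0.0 <= p_repeat <= 1.0:
        raise ValueError("p_repeat must be a probability")
    # Linear interpolation between a short default and a long ceiling (an assumed policy).
    return round(base_days + p_repeat * (max_days - base_days))


print(retention_days(0.05))  # an anonymous, one-off exchange
print(retention_days(0.9))   # a first meeting with a new colleague
```

A linear mapping is only one choice; a policy could just as well use tiers or a learned curve.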

Retention Review

Periodic evaluation of stored memories. Memories whose relevance has diminished might be compressed, archived or discarded, ensuring the Agent Cloud’s storage stays light.

Memory Transparency & User Pruning

An essential aspect. Users can manually prioritize certain memories for retention or deletion, ensuring they remain in control of what their Agent Cloud remembers.
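A periodic retention review that respects user pruning might look like the sketch below. The exponential decay, the half-life, and the keep/compress/archive/discard thresholds are all assumed values, and `StoredMemory` and `review` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class StoredMemory:
    text: str
    relevance: float        # 0..1, e.g. a weightage score
    age_days: int
    pinned: bool = False    # user asked to keep it
    forget: bool = False    # user asked to delete it


def review(m: StoredMemory, half_life_days: float = 90.0) -> str:
    """Decide what a periodic retention review does with one memory."""
    # User pruning always wins over the automatic policy.
    if m.forget:
        return "discard"
    if m.pinned:
        return "keep"
    # Relevance halves every `half_life_days`; the thresholds are assumed values.
    score = m.relevance * 0.5 ** (m.age_days / half_life_days)
    if score >= 0.5:
        return "keep"
    if score >= 0.2:
        return "compress"
    if score >= 0.05:
        return "archive"
    return "discard"


for mem in [StoredMemory("new colleague's name", 0.9, 0),
            StoredMemory("old project notes", 0.9, 270),
            StoredMemory("favorite café", 0.1, 800, pinned=True)]:
    print(mem.text, "->", review(mem))
```

Checking the user’s explicit choices first keeps people in control of what their Agent Cloud remembers, regardless of how the automatic scoring is tuned.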

Phases: Interactions Between Two Agent Clouds

Initiation & Data Handshake: This phase involves two agent clouds becoming aware of each other’s presence and engaging in an initial data exchange. They share contextual information about their respective users without compromising private data. This sets the stage for the communication between the two humans.

Alignment & Objective Setting: The two agent clouds collaborate to identify the common goals, interests, and objectives of the upcoming interaction. This could be setting the agenda for a business meeting, understanding the learning goals of a student, or gauging the cultural nuances to be addressed in a cross-cultural conversation.

Intelligent Context Layering: Here, the agents assess and superimpose layers of context onto the interaction. The decisions to pull in data should align to user settings, and data layers imported into the temporary interaction window could be as broad as general trends or as specific as the meeting minutes of a specific meeting a few months ago. It’s all about making the interaction richer and more meaningful by adding layers of information that might be of interest, as the conversation unfolds.

Real-time Facilitation: Here, the agent clouds actively assist during the live interaction, with tasks like real-time translation and instant recall of past interactions or relevant information.

Feedback & Learning: Post-interaction, individual agents analyze the conversation’s outcomes against their set objectives, hypotheses and expectations. They learn from any discrepancies, updating their mental models for future interactions.
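The five phases can be tracked with a small state machine. This sketch assumes the phases run strictly in order, which is a simplification: context layering, for instance, may continue while the conversation unfolds. `Phase` and `DyadSession` are hypothetical names.

```python
from enum import Enum


class Phase(Enum):
    INITIATION = 1        # Initiation & Data Handshake
    ALIGNMENT = 2         # Alignment & Objective Setting
    CONTEXT_LAYERING = 3  # Intelligent Context Layering
    FACILITATION = 4      # Real-time Facilitation
    FEEDBACK = 5          # Feedback & Learning


class DyadSession:
    """Tracks which phase a Dyad is in and only allows moving forward in order."""

    def __init__(self) -> None:
        self.phase = Phase.INITIATION
        self.history = [self.phase]

    def advance(self) -> Phase:
        if self.phase is Phase.FEEDBACK:
            raise RuntimeError("session already finished")
        self.phase = Phase(self.phase.value + 1)
        self.history.append(self.phase)
        return self.phase


session = DyadSession()
while session.phase is not Phase.FEEDBACK:
    print(session.advance().name)
```

Recording the phase history gives the Event Log a natural place to note when each stage began.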

Molecule

If the Dyad represents the future of interpersonal interactions in a digital-first world, then the Molecule extends this concept to group dynamics. The Molecule is a complex, multi-faceted relationship among multiple Agent Clouds, encapsulating small friend groups, teams, small companies, or even nonprofits. In the same way that atoms form molecules in the physical world, individual Agent Clouds connect to form Molecules in the digital realm.

Note: the concepts from here on are less technical and more conceptual, as we reach beyond the bounds of what I can imagine.

Structural Components of a Molecule

Collective Context Layer

In a Molecule, the first structural element is the collective context layer. This layer aggregates essential, non-intrusive data from each individual Agent Cloud involved. This aggregated data provides a comprehensive snapshot, offering insights that guide the actions of the Molecule.

Group Priority Mapping

After forming the collective context layer, the Molecule undergoes “Group Priority Mapping.” This step involves analyzing the goals, priorities, and preferences of all the participating Agent Clouds to formulate a collective set of objectives and actionable tasks.
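Group Priority Mapping needs some rule for merging individual rankings. The sketch below uses a Borda count, a standard rank-aggregation method chosen here as an assumption rather than something the framework specifies; `group_priorities` is a hypothetical name.

```python
from collections import defaultdict


def group_priorities(rankings: dict[str, list[str]]) -> list[str]:
    """Merge each member's ranked priorities into one group order (Borda count)."""
    scores: dict[str, int] = defaultdict(int)
    for ranking in rankings.values():
        n = len(ranking)
        for position, item in enumerate(ranking):
            # First choice earns n points, last earns 1; unranked items earn 0.
            scores[item] += n - position
    # Highest score first; ties broken alphabetically so the result is deterministic.
    return sorted(scores, key=lambda item: (-scores[item], item))


weekend = {
    "ana": ["hike", "museum", "beach"],
    "ben": ["beach", "hike", "museum"],
    "cy": ["hike", "beach", "museum"],
}
print(group_priorities(weekend))
```

Borda rewards options that are broadly acceptable rather than only those with the most first-place votes, which suits small groups such as families or book clubs.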

Governance Protocol

Every Molecule has a governance protocol that determines how decisions are made, how tasks are allocated, and how conflicts are resolved. This ensures a balanced and fair interaction among all the constituent Agent Clouds.
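A governance protocol could start from something as plain as quorum plus majority. The rule below (at least half the members must vote, strict majority wins, ties fail) is a hypothetical example, and `decide` is an illustrative name.

```python
def decide(votes: dict[str, bool], members: set[str], quorum: float = 0.5) -> str:
    """Hypothetical governance rule: a quorum must vote, then a strict majority wins."""
    cast = {m: v for m, v in votes.items() if m in members}  # ignore non-members
    if len(cast) < quorum * len(members):
        return "no quorum"
    yes = sum(cast.values())
    # Ties are rejected: approval needs more yes votes than no votes.
    return "approved" if yes * 2 > len(cast) else "rejected"


members = {"ana", "ben", "cy", "dee"}
print(decide({"ana": True, "ben": True, "cy": False}, members))
```

Different Molecules could plug in different rules (consensus, weighted votes, delegated decisions) behind the same interface.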

Molecular Processes

Each agent scans the collective context layer to extract information relevant to its specific tasks or goals.

Agents maintain data integrity and privacy by only accessing or modifying data for which they have explicit permission.

Agents continuously update the collective context layer with new information, allowing the Molecule to adapt dynamically.

Agents analyze data not just for content but also for context, considering the source, timing, and relational aspects to other data.
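The “explicit permission” rule can be enforced at the shared layer itself. In this minimal sketch every read and write is checked against per-agent grants; `CollectiveLayer`, the agent names, and the keys are hypothetical.

```python
from typing import Optional


class CollectiveLayer:
    """Shared Molecule store where every read and write is checked against a grant."""

    def __init__(self) -> None:
        self.data: dict[str, str] = {}
        self.grants: dict[str, set[tuple[str, str]]] = {}  # agent -> {(key, mode)}

    def grant(self, agent: str, key: str, mode: str) -> None:
        self.grants.setdefault(agent, set()).add((key, mode))

    def _check(self, agent: str, key: str, mode: str) -> None:
        if (key, mode) not in self.grants.get(agent, set()):
            raise PermissionError(f"{agent} may not {mode} {key}")

    def read(self, agent: str, key: str) -> Optional[str]:
        self._check(agent, key, "read")
        return self.data.get(key)

    def write(self, agent: str, key: str, value: str) -> None:
        self._check(agent, key, "write")
        self.data[key] = value


family = CollectiveLayer()
family.grant("chores-agent", "chore_rota", "read")
family.grant("chores-agent", "chore_rota", "write")
family.write("chores-agent", "chore_rota", "Sam: dishes")
print(family.read("chores-agent", "chore_rota"))
```

Denying by default means an agent added to the Molecule sees nothing until a member explicitly grants it access.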

Transparent Ledger

An open yet secure log that captures all interactions, transactions, and decisions made within the Molecule, providing a history and accountability layer.

Tasks Handled by a Molecule

Project Management: Coordinating timelines, milestones, and tasks among multiple participants to achieve common goals across multiple projects.

Delegation & Resource Allocation: Identifying and distributing resources, such as workloads, expertise, or assets, to fulfill group objectives.

Molecule Examples

Family Units

A small family could function as a molecule. Each family member’s Agent Cloud could coordinate to manage household chores, family outings, meal plans, and so forth.

Book Clubs

A book club where everyone reads the same book and meets once a month could also be a Molecule. The agents could decide on a book, set reminders for reading targets, and arrange meetings.

Nonprofit Organizations

A small nonprofit focused on community aid could represent a molecule, where tasks like fundraising, event planning, and volunteer coordination are managed.

Organisms: Inter-Molecule Community Networks

Just as individual Agent Clouds engage in Dyads, Molecules might also interact. The organism could represent a molecule’s interactions with other molecules, negotiating and establishing links, adding another layer of complexity to the system.

Anatomy of an Organism

Clustered Vector Pools

Clustered Vector Pools would be regions within the Organism where similar or related vectors from various Molecules converge, making them easier to access and reference for common tasks.

Network Alignment

Network Alignment would serve as the Organism’s communication backbone, keeping all Agent Clouds aligned. It could propagate directives and policies to every Agent Cloud, enable high-priority broadcasts for urgent notifications, and maintain a persistent ping mechanism for updates. It might even need to run periodic alignment checks to ensure Agent Clouds are synchronized with the Organism’s latest knowledge, and use feedback loops to gauge and refine the effectiveness of the directives and updates it communicates.

Boundary Sensors

The Organism can have specialized boundary sensors at its periphery that detect external stimuli or changes in the environment, triggering responses or adaptations within the Organism.

Ensuring Alignment

The Agent Cloud concept brings with it many questions. It’s not necessarily a proposal, but something of an anticipated future. One of the goals of anticipating downstream futures is to identify risks and potentially detrimental outcomes in order to help guide the design of these systems. Thinking big, and dreaming of optimistic futures is crucial to building a future we want to live in, but we must do that while avoiding poorly designed technological infrastructure.

The augmentation of individual capabilities through AI agents requires a strong and tuned moral compass; when AI magnifies human intent, both benign and malicious, the ethical dimensions multiply.

Drawing from “Dynamic Future-Proofing,” we have to ask, now even more than before: Who holds agency over these agents? What mechanisms deter misuse? How do we manage consent and privacy? How do we keep those protections at the forefront of the tech? This future calls for a definition of the moral architecture that should steer semi-autonomous agents.

User control and alignment with personal values are key considerations. An essential aspect of this control is the ability for users to define and fine-tune how their AI agents operate, ensuring that the dynamic is one of machine assistance driven by human intent and decision-making. Violation of Expectation (VoE) and metacognitive prompting, concepts and methodologies illustrated by Leer, Trost, and Voruganti at PlasticLabs, could contribute here. More granular settings should offer control over data sharing, contextual operations, and task-specific consent.

The dynamic between AI agents and humans should be rooted in continual refinement and feedback. Transparent action logs and feedback loops are necessary to give users a clear view into their agents’ activities and allow adjustments as needed. They should incorporate the processes and methods of explainable artificial intelligence (XAI), which help people understand and trust the operations and outputs of their LLMs and agents.

The introduction of community-shared ethics and collaborative setting adjustments further paints a picture of a future where the collective wisdom of many aids individual users in sculpting their AI’s ethical and operational landscape.

As AI becomes a mainstream navigator in our lives, there’s a looming shadow: the risk of relying on models that have unintentionally absorbed human biases and inaccuracies, as filtered through the information available on the internet, which is itself heavily biased. The gap between an accurate view of the real world and a biased one risks widening as AI systems become more integrated, unless those inaccuracies are addressed first so that no one is left behind in the wake of this rapid progress. Addressing this calls for AI ecosystems that are not just intelligent and capable but also broadly accessible, ensuring technology uplifts everyone, not just a select few.

Conclusion

In our exploration of human-agent collaborations, from the foundational ‘Atom’ and collaborative ‘Dyad,’ to the complex ‘Organisms’, we’ve recognized the profound symbiosis between humans and machines. The vivid examples, spanning from day-to-day tasks like travel planning to complex endeavors such as business operations and delegation, depict a near future where the boundary between human endeavors and machine intelligence is seamless.

“The machine is financially and functionally autonomous and transactions are made on a H2H, M2H, and M2M basis”: These agents could potentially handle transactions in intra-agent operations between agents within a single agent cloud or inter-agent operations between agents of different clouds.

However, understanding this emerging landscape is just the beginning. It’s imperative to grasp the broader context of these developments. The trajectory we’re on doesn’t merely suggest iterative enhancements but hints at a paradigm shift in human-machine collaboration.

Strategic foresight is not about predicting the future but about preparing for multiple probable futures. Our current endeavors are indicators, signposts pointing towards these futures. By acknowledging and interpreting these signs, we position ourselves to design for these futures, and in doing so, actively shape them. It’s about having the audacity to envision a world where our digital counterparts don’t just complement, but amplify our capabilities.

Our journey through the Atom, Dyad, Molecule, and Organism has provided insights and frameworks. Yet, the real challenge lies ahead: to harness these insights, anticipate challenges and opportunities, and architect a future where our combined potential, both human and machine, is realized to its fullest extent, while keeping people at the center. It’s not just about solving problems, but enhancing human agency while protecting privacy and consent.

Consider this a call to action to anticipate this future, and begin designing and engineering the best path forward.

Appendix of Terms

Retrieval Augmented Generations (RAG): A method that integrates embedding models that transform text into vectors in multi-dimensional point space with knowledge retrieval mechanisms, allowing the model to pull in additional relevant context when generating responses to improve accuracy and relevance.

Vector Database: A database that stores data in the form of vectors, representing points in a multi-dimensional space. Such databases are optimized for high-speed similarity searches, making them useful for tasks like information retrieval or supplying context to machine learning models.

Relevant Context: Pertinent information retrieved through embedding-based similarity search during a RAG process, ensuring generated responses are accurate and context-aware and enhancing the model’s understanding of, and relevance to, the query.
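The three entries above fit together in a single retrieval loop. A minimal Python sketch follows, under loud assumptions: a toy bag-of-words vector stands in for a learned embedding model, a brute-force list scan stands in for a real vector database's index, and the sketch stops at building the augmented prompt rather than calling any language model. `VectorStore`, `embed`, and `build_augmented_prompt` are hypothetical names.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a sparse bag-of-words vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorStore:
    """Minimal in-memory vector store with brute-force similarity search."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_augmented_prompt(store: VectorStore, question: str, k: int = 2) -> str:
    """Retrieve the most relevant context and prepend it to the question."""
    context = "\n".join(f"- {doc}" for doc in store.search(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


store = VectorStore()
store.add("Alice prefers aisle seats on morning flights.")
store.add("Alice's travel budget is 800 EUR per trip.")
store.add("Bob's team meets every Tuesday at 10:00.")
print(build_augmented_prompt(store, "Book Alice a flight within budget", k=2))
```

In practice the embedding and search steps would be handled by an actual embedding model and vector database; what carries over is the structure: embed the query, rank stored items by similarity, and prepend the top matches as relevant context.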

Intelligent Synchronization: The process by which various agents, systems, or databases update and align their information, ensuring that all entities have access to the most current and relevant data.
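One simple way to realize this kind of synchronization is last-writer-wins replication, sketched below in Python. This is an assumption chosen for brevity, not a mechanism the framework prescribes; production systems often use vector clocks or CRDTs to handle concurrent edits. `Replica` and `synchronize` are hypothetical names, and timestamps are plain integers.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """One agent's local copy of shared data: key -> (timestamp, value)."""
    agent: str
    entries: dict[str, tuple[int, str]] = field(default_factory=dict)

    def write(self, key: str, value: str, timestamp: int) -> None:
        self.entries[key] = (timestamp, value)


def synchronize(*replicas: Replica) -> None:
    """Align all replicas using last-writer-wins: the newest timestamp per key prevails.

    Ties on equal timestamps are resolved by replica order (first seen wins);
    a real system would add a deterministic tie-breaker.
    """
    merged: dict[str, tuple[int, str]] = {}
    for replica in replicas:
        for key, (ts, value) in replica.entries.items():
            if key not in merged or ts > merged[key][0]:
                merged[key] = (ts, value)
    for replica in replicas:
        replica.entries = dict(merged)  # each replica gets its own copy


# Usage: a calendar agent and a travel agent reconcile their views of a meeting.
calendar = Replica("calendar-agent")
travel = Replica("travel-agent")
calendar.write("meeting_time", "Tue 10:00", timestamp=1)
travel.write("meeting_time", "Tue 14:00", timestamp=2)
travel.write("hotel", "Lisbon Central", timestamp=1)
synchronize(calendar, travel)
print(calendar.entries["meeting_time"])  # (2, 'Tue 14:00')
```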

Dynamic Delegation: A method where tasks are assigned to agents based on their capabilities, availability, and the current context. This ensures efficient resource utilization and timely completion of tasks.
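A minimal Python sketch of dynamic delegation, assuming that "current context" is approximated by each agent's current load: filter agents by capability and availability, pick the least-loaded one, and hand the task back to the human when no agent qualifies. `AgentProfile`, `Task`, and `delegate` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AgentProfile:
    name: str
    capabilities: set[str]
    available: bool = True
    load: int = 0  # tasks currently assigned (our stand-in for "current context")


@dataclass
class Task:
    name: str
    required_capability: str


def delegate(task: Task, agents: list[AgentProfile]) -> Optional[AgentProfile]:
    """Assign the task to an available, capable agent, preferring the least loaded."""
    candidates = [a for a in agents if a.available and task.required_capability in a.capabilities]
    if not candidates:
        return None  # no suitable agent: escalate to the human core
    chosen = min(candidates, key=lambda a: a.load)  # first agent wins ties
    chosen.load += 1
    return chosen


# Usage
agents = [
    AgentProfile("travel-agent", {"book_flight", "book_hotel"}, load=2),
    AgentProfile("assistant-agent", {"book_flight", "schedule"}, load=0),
    AgentProfile("finance-agent", {"pay_invoice"}, available=False),
]
for task in [Task("Flight to Lisbon", "book_flight"), Task("Pay vendor", "pay_invoice")]:
    agent = delegate(task, agents)
    print(task.name, "->", agent.name if agent else "human review")
```

Returning `None` rather than forcing an assignment keeps the person at the center: when no agent is suitable, the decision goes back to the human.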

Agent: A digital entity or software designed to perform specific tasks autonomously.

Agent Cloud: A collection or network of agents that operate in tandem, sharing resources and information. They work collaboratively to handle complex tasks and decisions based on collective intelligence.

Atom: The foundational unit in the AI Agent Cloud concept, composed of the Human Core, Data Layer, and Agent Cloud.

Dyad: A complex unit formed when two Atoms interact, leading to the sharing of agents and data layers.

Molecule: An even more intricate structure that emerges from the interaction of multiple Dyads. This involves several humans, their respective data orbits, and an interconnected cloud of agents.
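The Atom, Dyad, and Molecule definitions can be read as a nested data model. The Python sketch below is one hypothetical encoding: the class names mirror the glossary, while the `shared_keys` sharing agreement, the owner-namespaced keys, and the choice to pool both Atoms' agents within a Dyad are assumptions added for illustration. Each Dyad exposes only the data its participants agreed to share, in keeping with the emphasis on privacy and consent.

```python
from dataclasses import dataclass, field


@dataclass
class Atom:
    """Human Core + Data Layer + Agent Cloud."""
    human: str
    data_layer: dict[str, str] = field(default_factory=dict)
    agent_cloud: set[str] = field(default_factory=set)


@dataclass
class Dyad:
    """Two interacting Atoms sharing agents and part of their data layers."""
    a: Atom
    b: Atom
    shared_keys: set[str] = field(default_factory=set)  # assumed sharing agreement

    def shared_data(self) -> dict[str, str]:
        """Expose only keys in the Dyad's sharing agreement, namespaced by owner."""
        return {
            f"{atom.human}.{key}": value
            for atom in (self.a, self.b)
            for key, value in atom.data_layer.items()
            if key in self.shared_keys
        }

    def shared_agents(self) -> set[str]:
        """Agents from both Atoms that can now collaborate on the pair's behalf."""
        return self.a.agent_cloud | self.b.agent_cloud


@dataclass
class Molecule:
    """Several Dyads forming an interconnected cloud of humans and agents."""
    dyads: list[Dyad]

    def humans(self) -> set[str]:
        return {atom.human for d in self.dyads for atom in (d.a, d.b)}

    def agent_cloud(self) -> set[str]:
        return set().union(*(d.shared_agents() for d in self.dyads))


# Usage
alice = Atom("Alice", {"budget": "800 EUR", "health": "private"}, {"alice-travel"})
bob = Atom("Bob", {"calendar": "Tue free"}, {"bob-calendar"})
carol = Atom("Carol", {"calendar": "Wed free"}, {"carol-calendar"})
ab = Dyad(alice, bob, shared_keys={"budget", "calendar"})
bc = Dyad(bob, carol, shared_keys={"calendar"})
team = Molecule([ab, bc])
print(ab.shared_data())  # {'Alice.budget': '800 EUR', 'Bob.calendar': 'Tue free'}
print(sorted(team.humans()))  # ['Alice', 'Bob', 'Carol']
```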

Dynamic Future-Proofing: A conceptual approach emphasizing the need to anticipate future developments and challenges, ensuring systems and strategies remain relevant and effective over time.

Decentralized Machine Economy: An economic structure wherein machines or digital entities have their own autonomy, driven by algorithms and free of centralized control, enabling novel human-to-human (H2H), machine-to-human (M2H), and machine-to-machine (M2M) relationships to emerge. (2018, Richly Imagined Future, P. Pagé).

Collective Data Layer: A shared repository of information accessible by multiple agents or entities within a network, fostering collaboration and informed decision-making.

H2H (Human-to-Human): Traditional interactions that take place directly between two or more humans, without the intervention of automated systems or digital agents.

M2M (Machine-to-Machine): Refers to direct communication between autonomous agents without human intervention. This can involve anything from two computers exchanging data to complex systems where multiple agents collaborate.

M2H (Machine-to-Human): Describes the interaction between machines (typically computational devices or software) and humans. This can be anything from a user interface on a computer to more advanced interactions involving AI or intelligent agents assisting human users.

Agent Cloud Atomic Model: A more advanced and intricate framework that emphasizes the collaboration and synergy between agents, data layers, and humans. Instead of viewing interactions in the binary terms of M2M or M2H, the Agent Cloud Atomic Model focuses on a dynamic and fluid exchange of information and tasks within a network of agents, their associated data layers, and the humans they serve. This model facilitates more nuanced interactions, ensuring effective task delegation, learning, and adaptation based on changing contexts and needs.


Dynamic Future-Proofing: Pagé, P. (2021) In A. Manu (Ed.), Dynamic Future-Proofing: Integrating Disruption in Everyday Business (pp. 143–151). Emerald Publishing Limited.

https://books.emeraldinsight.com/page/detail/dynamic-future-proofing/?k=9781800435278

https://publish.obsidian.md/philippepage/Work/Agent+Clouds